A compelling film pitch is the art of bringing a vision to life. But what happens when you want to present a key scene or even a “trailer” for a film that doesn’t exist yet? What about when you are looking for a non-technical or cost-free solution for an AI storyboard generator and find none?

If you are in my position as an indie filmmaker – someone who lacks extensive art design skills, a hefty budget for visual development, or the advanced prompt engineering expertise often required for cutting-edge AI creative tools (e.g., RunwayML, Midjourney, DALL-E 2, Stable Diffusion) – you might feel your words are your only true asset. Fortunately, today these words can be powerfully amplified by AI large language models (LLMs) like Gemini and ChatGPT. But how do you actually transform them into a compelling visual pitch?

Compounding this creative challenge is the ever-present distribution concern: “What happens after I finish the film?” This question often becomes a demotivating mantra, capable of triggering a full-blown creative block. For the past three years, this exact dilemma has kept my filmmaking dream on hold, as I pursued a PhD aimed specifically at uncovering practical solutions that could reignite my creative joy.

Focusing on the core creative hurdle, I reframed my question: “How can I transform my words into an AI storyboard generator?” My transdisciplinary research culminated in a methodology I call the semantic filmmaking framework (semantic storytelling). This innovative film production framework allowed me to craft powerful visual narratives not only for a film that exists solely as a concept in my mind, but for any type of media content, ensuring both visual coherence and complete creative control.

The Indie Filmmaker’s Visual Conundrum

The traditional path to visualizing a film idea – hiring concept artists, illustrators, or even animating full storyboards – is often a costly, time-consuming, and resource-intensive endeavor. For independent creators, these barriers can be insurmountable, forcing us to rely on verbal descriptions or rudimentary sketches to convey complex cinematic visions.

While AI offers incredible potential, mastering advanced prompt engineering for highly specific visual outputs can feel like another skill barrier. My goal was to bypass these limitations, finding a way to democratize high-fidelity visual pitching without compromising on artistic integrity or precise creative direction.

Semantic Filmmaking: My Method for AI-Powered Visuals

My solution was to approach the storyboard process with a structured, language-first philosophy. Through semantic filmmaking, I developed a method to translate my exact vision into detailed, actionable text prompts. For a key scene from my film idea, I meticulously crafted 10 distinct visual panels, each generated solely from these textual descriptions. Each description was carefully constructed to include:

- Precise Composition: Defining camera angles, shot types (e.g., extreme close-up, wide shot), and specific camera movements (e.g., slow dolly zoom, rapid pan).

- Character Nuances: Capturing expressions, subtle actions, and precise positioning within the frame.

- Detailed Setting & Props: Specifying environmental details, key props, character costumes, intricate textures, and the overall mood-setting ambiance.

- Overall Visual Style: Dictating the desired color palette, lighting (e.g., chiaroscuro, naturalistic), and the emotional atmosphere of the scene.
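To make the four components concrete, here is a minimal Python sketch, assuming you keep each panel's description as four labeled fields and join them into one plain-text prompt before pasting it into an image generator. The class `PanelDescription`, its field names, and the sample values are illustrative assumptions, not part of Gemini's or any other tool's API.

```python
from dataclasses import dataclass


@dataclass
class PanelDescription:
    """One storyboard panel, split into the four components listed above.

    Hypothetical structure for illustration; no AI tool requires these names.
    """
    composition: str  # camera angle, shot type, camera movement
    character: str    # expressions, actions, position in frame
    setting: str      # environment, props, costume, textures, ambiance
    style: str        # color palette, lighting, emotional atmosphere

    def to_prompt(self) -> str:
        # Label each component so the model receives explicit, separated instructions.
        parts = [
            f"Composition: {self.composition}",
            f"Character: {self.character}",
            f"Setting: {self.setting}",
            f"Visual style: {self.style}",
        ]
        return "\n".join(parts)


panel = PanelDescription(
    composition="Extreme close-up, slow dolly in",
    character="Dr. Evelyn Reed, concentrated, turning a glowing stone in her fingers",
    setting="Ancient bazar stall, worn cloth, amulets, wood and leather textures",
    style="Soft warm dawn light, earth tones, reduced saturation, mysterious mood",
)
print(panel.to_prompt())
```

Keeping the labels in the prompt is a design choice: it makes each component easy to adjust on its own between iterations without rewriting the whole description.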

By feeding these structured textual prompts into the free version of Gemini and leveraging its image generation capabilities, I turned my words into the AI storyboard generator I needed. I was able to produce a series of visually coherent and highly specific frames that mirrored my internal vision with remarkable accuracy.

Unlocking Unprecedented Creative Control and Coherence

One of the most transformative aspects of this AI-powered, semantic approach was the unprecedented level of creative control it afforded. Without spending much time refining the textual prompts, I could iterate rapidly, making precise adjustments to the visual output without the delays or costs associated with traditional illustration. The AI became an intelligent extension of my mind’s eye, translating nuanced semantic instructions into tangible visuals.

This kept all panels visually coherent, maintaining a unified aesthetic and tone – a critical factor for a convincing pitch. My specific visual vocabulary, embedded in the structured text, guided the AI reliably, fostering an efficient feedback loop that streamlined the entire pre-production visualization phase. In essence, the structured text became a vocabulary for my film’s emotional architecture.

The Ultimate Pitch: A Trailer for a Film That Doesn’t Exist

The culmination of this innovative process was the assembly of these 10 distinct visual panels into a short, cohesive video. Thanks to their inherent semantic coherence, the individual frames transitioned seamlessly, creating a fluid and impactful narrative.

This video is far more than just an animated storyboard; it functions as a compelling key scene from the unmade film, serving as an effective trailer/teaser/pitch that powerfully demonstrates the narrative and aesthetic potential of the idea. It allows stakeholders to truly see the film, feel its tone, and grasp its visual language long before any actual production takes place, or even before the script is written. This approach transforms abstract concepts into concrete, tangible visual proof of concept.

Beyond the Board: Semantic Filmmaking’s Broader Impact

Semantic technologies and principles have been integral to the internet’s evolution, with major streaming platforms like Netflix and Amazon already leveraging them for content enrichment and amplified discovery in the pre-distribution phase. Yet, for many filmmakers, their application has remained overly complex. The film industry’s traditional leanings meant a slower embrace of digital literacy, leaving us now to urgently find practical solutions for our three biggest challenges:

- Making the film: establishing a faster, more cost-effective production process that enhances creativity;

- Understanding audiences: forging strong connections with our film’s target audiences;

- Making the film seen: navigating the complexities of digital distribution for amplified visibility and discovery.

In the digital era, we must create with semantic processing in mind.

Connecting with Audiences, Human and Machine: The ‘Kailasa is Calling’ Case Study

My answer to these systemic challenges within the traditional supply-chain model is the semantic film production framework, an approach that also preserves my comfort and creative freedom as a filmmaker by not forcing me into the roles of technologist or digital marketer.

This methodology, painstakingly developed through extensive research, is designed to achieve a crucial dual understanding: it helps a film resonate powerfully with its human audiences and become optimally comprehensible to machines (algorithms). This dual understanding is key to unlocking discoverability and connection.

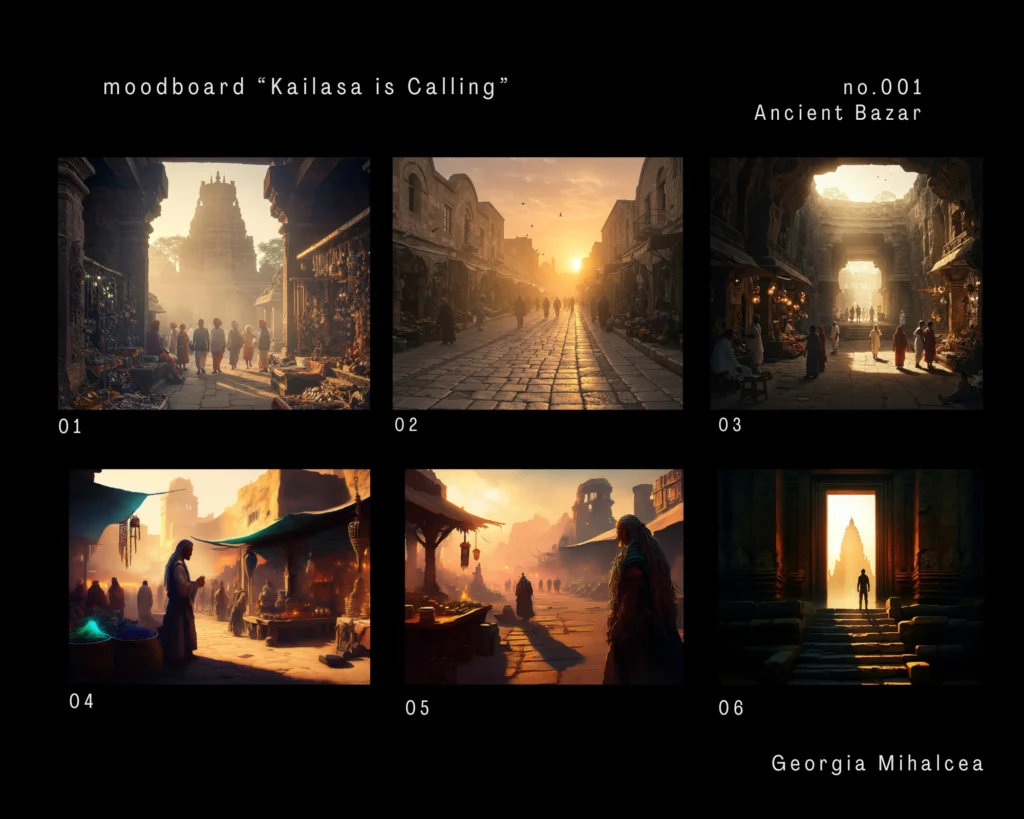

Below, I’ll demonstrate a piece of my process through the case study ‘Kailasa is Calling’, offering a visual insight into how this framework empowers creators to craft compelling visual narratives and forge direct connections between their films and relevant audiences.

Step 1: Turn a typical script scene into a semantic scene

A traditional script functions as a semi-structured linear narrative. AI creative tools, however, operate on an algorithmic logic that thrives on explicit, precise, and highly structured data. For effective human-computer communication – the key to unlocking AI’s creative potential – precision is paramount.

Think of it in the same way you direct your crew and cast: your creative notes and specific directives are crucial for realizing your cinematic vision. The beauty of the semantic approach is that we no longer keep these valuable insights archived; instead, we use them to help AI understand.

Here’s how to begin this transformation. Keep in mind that even though I did not follow every detail of this case study religiously, the results far exceeded my expectations.

The Script Scene: Pre-Internet Era

EXT. ANCIENT BAZAR – DAWN

The bazar comes to life, bathed in the soft light of dawn.

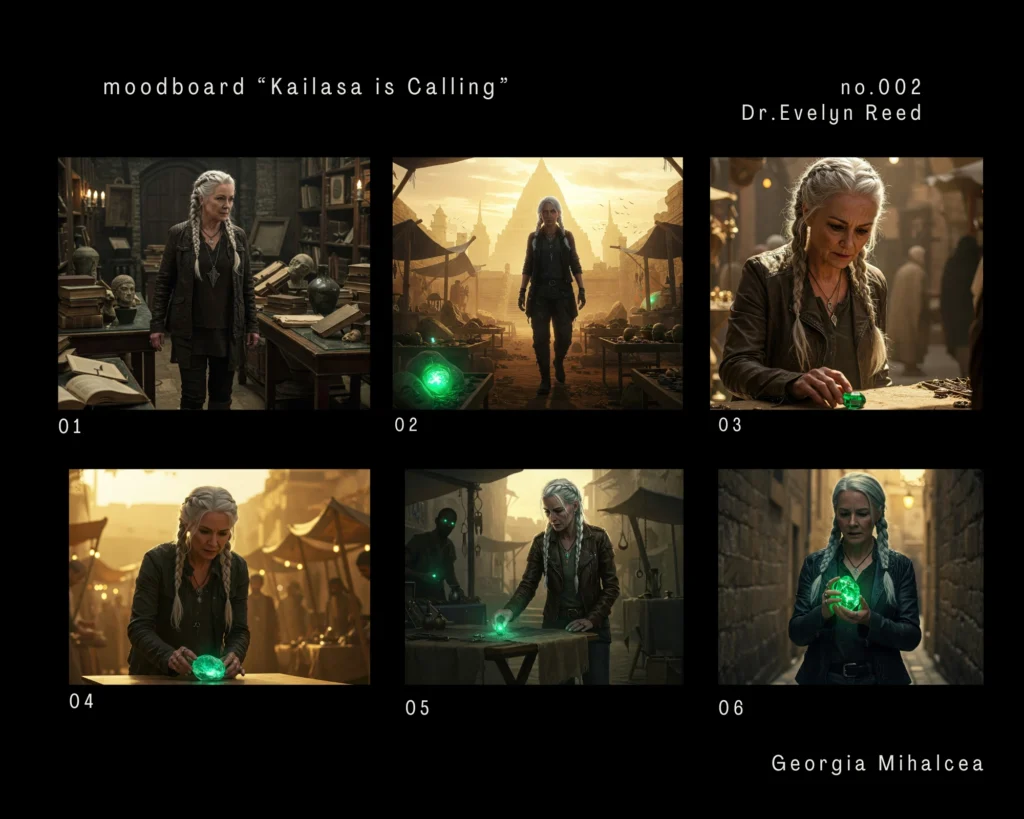

Silhouettes of people move in the background, but attention gathers around DR. EVELYN REED, who stands in front of a stall covered with a piece of worn cloth.

She carefully examines a strange artifact, a smooth, emerald-green stone that glows faintly.

A VENDOR watches her curiously from a distance.

A sense of mystery hangs in the air, mixed with the sounds of the market coming to life and a distant, almost hypnotic chant.

DR. REED (V.O.)

What could it be?

VENDOR

Amulets! Powerful amulets for luck and protection!

The Semantic Scene: Digital Era

Here is one way to “translate” the scene into a structure, integrating my directing and creative notes so that it is clear and ready for semantic processing:

- Scene: Ancient Bazar – Sunrise

- Description: Dr. Reed discovers an artifact in the ancient bazar.

- Settings:

  - Location: Ancient bazar (exterior)

  - Place: Kailasa Temple, India

  - Time of day: Sunrise

  - Atmosphere: mysterious, eerie

  - Symbolism: witness to history and culture, a new beginning, the unknown

- Characters:

  - Principal: Dr. Evelyn Reed

    - Profession: researcher, explorer, anthropologist

    - Personality: curious, determined, intelligent

    - Film Narrative Arc: From skepticism to discovery and fascination

    - Scene Narrative Arc: Begins with curiosity and doubt, becomes intrigued and interested in power and mystery

    - Details: female, white, middle-aged

    - Costume: nomad, traveler, ethnic-historical, practical

    - Hair: long, graying hair, braided

    - Makeup: natural

    - Relationships:

      - with Seller: unknown, suspicious

  - Secondary: Vendor

    - Profession: Seller of amulets and artifacts

    - Personality: mysterious, calculated

    - Film Narrative Arc: From a simple vendor to an intermediary with hidden powers

    - Scene Narrative Arc: From an enigmatic presence to a skilled negotiator with a hidden history

    - Details: male, short stature, ageless

    - Costume: Ethnic, oriental

    - Hair: Black

    - Makeup: Natural

    - Relationships:

      - with Dr. Reed: Unknown, potentially opportunistic

  - Extras: Silhouettes of people in the background, passers-by

- Principal: Dr. Evelyn Reed

- Action:

  - Dr. Reed examines a strange artifact – a faintly glowing green stone.

- Mise-en-scène Design:

  - Spatial Arrangement: Stalls aligned, passers-by in the background, open to the exterior, characters integrated into the market atmosphere.

  - Objects: Faintly glowing green stone, amulets, ethnic jewelry, baskets, old objects.

  - Textures: Wood, metal, stone, natural textiles, leather, shiny objects.

  - Style: Rustic, ethnic, simple, with magical accents.

- Lighting:

  - Light: Soft, natural, warm tones.

  - Saturation: Reduced.

  - Color Scheme: Restricted, centered on earth tones, evoking a rustic and natural atmosphere.

  - Notes: Strong sunlight comes from behind, creating a halo effect on the character’s hair and long, accentuated shadows that add texture and depth to the scene.

- Colors:

  - Dominant Colors: Beige, light grey, green reflections on a stall from the stone’s glow.

  - Secondary Colors: Golden-yellow reflections, dark grey shadows.

- Editing:

  - Tempo: Slow, smooth.

  - Duration: 10 seconds.

  - Mood: Meditative, an atmosphere of mystery.

- Shots:

  - Shot 1: Establishing shot: ancient marketplace, sunrise.

  - Shot 2: General shot: Dr. Reed sees the green glowing stone.

  - Shot 3: Close-up: Dr. Reed examines the artifact.

  - Shot 4: Close-up: Vendor, sly smile.

- Creative Intention:

  - Emotions: Curiosity, suspense, mystery

  - Message: The power of objects, the allure of secrets, history as an enigma.
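Because every shot in the scene shares the same setting, lighting, and color notes, one way to turn the semantic scene into panel prompts is to combine the scene-level fields with each shot line. The sketch below encodes only a subset of the scene above as a Python dictionary; the key names and the `shot_prompts` helper are hypothetical, and the output is plain text you would paste into Gemini or another text-to-image tool.

```python
# A subset of the semantic scene above, encoded as a nested dictionary.
# Key names are illustrative; any consistent naming would work.
scene = {
    "scene": "Ancient Bazar, Sunrise",
    "settings": {
        "location": "Ancient bazar (exterior)",
        "place": "Kailasa Temple, India",
        "atmosphere": "mysterious, eerie",
    },
    "lighting": {
        "light": "Soft, natural, warm tones",
        "saturation": "Reduced",
        "notes": "Strong sunlight from behind, halo effect on the character's hair",
    },
    "colors": {
        "dominant": "Beige, light grey, green reflections from the stone's glow",
        "secondary": "Golden-yellow reflections, dark grey shadows",
    },
    "shots": [
        "Establishing shot: ancient marketplace, sunrise",
        "General shot: Dr. Reed sees the green glowing stone",
        "Close-up: Dr. Reed examines the artifact",
        "Close-up: Vendor, sly smile",
    ],
}


def shot_prompts(scene: dict) -> list[str]:
    """Return one prompt per shot, repeating the shared scene-level context."""
    s, l, c = scene["settings"], scene["lighting"], scene["colors"]
    shared = (
        f"Scene: {scene['scene']}. "
        f"Setting: {s['location']}, {s['place']}. Atmosphere: {s['atmosphere']}. "
        f"Lighting: {l['light']}; saturation {l['saturation'].lower()}; {l['notes']}. "
        f"Colors: {c['dominant']}; {c['secondary']}."
    )
    # Number the panels from 1 so they match the shot list.
    return [f"Panel {i}: {shot}. {shared}" for i, shot in enumerate(scene["shots"], start=1)]


for prompt in shot_prompts(scene):
    print(prompt)
```

Repeating the shared context in every prompt is a deliberate choice: it keeps each panel consistent even when a prompt is sent on its own. If you work within a single conversation, shorter per-shot prompts may be enough.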

This granular semantic scene isn’t merely a guide for AI; it becomes the central blueprint for every department involved in the film’s production and distribution. From directing and cinematography to art direction, sound design, editing, and scene annotations, this single, precise document ensures a shared understanding of the cinematic vision. It’s an end-to-end roadmap, fostering coherence and control throughout the entire filmmaking process.

This structured version is the direct input for an AI storyboard generator or any other AI-based creative tool that generates images or visuals from text. This approach also allows you to write your script directly in a semantic language, making it easier to refine along the way with AI.

What is “Semantic Processing” in this Context?

“Semantic processing” (or semantic parsing) refers to a machine’s (in our case, the AI’s) ability to understand the meaning of, and the relationships between, the data elements it receives, not just the words themselves.

In the case of our semantic scene, “semantic processing” means the AI:

- Identifies entities: It recognizes “Dr. Evelyn Reed” as a character, “green stone” as an object, “sunrise” as a time of day, etc.

- Understands attributes: It knows that “curious, determined, intelligent” are attributes of Dr. Reed’s personality, or that “soft, natural, warm tones” describe the lighting.

- Recognizes relationships: It understands that “Dr. Reed examines the stone” means Dr. Reed is the subject performing the action of examining the object “stone.” It recognizes that “facial expression: concentrated” is a state of Dr. Reed during the action.

- Interprets context: The combination of “Ancient Bazar,” “Sunrise,” “mysterious, eerie,” and “faintly glowing green stone” creates an atmosphere that the AI attempts to render visually, considering every intricate detail.
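As a toy illustration of the entities, attributes, and relationships listed above (not a description of Gemini’s internals), a fragment of the semantic scene can be flattened into (subject, predicate, object) triples, the same shape used by RDF and knowledge graphs. The `to_triples` helper and the key names are hypothetical.

```python
from typing import Any


def to_triples(subject: str, data: dict[str, Any]) -> list[tuple[str, str, str]]:
    """Flatten a nested description into (subject, predicate, object) triples.

    A nested dict with a "name" key becomes its own entity, so "Dr. Evelyn Reed"
    gets her own attributes instead of being just a string value.
    """
    triples: list[tuple[str, str, str]] = []
    for predicate, value in data.items():
        if isinstance(value, dict):
            # Relationship: link the parent to the child entity, then describe the child.
            child = value.get("name", f"{subject}/{predicate}")
            triples.append((subject, predicate, child))
            rest = {k: v for k, v in value.items() if k != "name"}
            triples.extend(to_triples(child, rest))
        elif isinstance(value, list):
            # Several values for the same attribute become separate triples.
            triples.extend((subject, predicate, str(item)) for item in value)
        else:
            # Simple attribute.
            triples.append((subject, predicate, str(value)))
    return triples


scene = {
    "time_of_day": "Sunrise",
    "atmosphere": ["mysterious", "eerie"],
    "principal": {
        "name": "Dr. Evelyn Reed",
        "personality": ["curious", "determined", "intelligent"],
        "examines": "green stone",
    },
}

for triple in to_triples("Ancient Bazar", scene):
    print(triple)
```

Triples such as ("Dr. Evelyn Reed", "examines", "green stone") make the subject, action, and object explicit, which is exactly the kind of relationship the list above describes.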

Therefore, semantic processing is the AI’s action of analyzing and interpreting the layer of meaning and relationships within the structured data I’ve provided, to generate a visual representation that is as faithful as possible to my vision.

Is the Detailed Description Itself the Semantic Process?

No. The detailed description itself (the structured semantic scene you just saw) is the result of my semantic structuring, or semantic encoding, process. It is the final product of my effort to organize information semantically, that is, with explicit meanings and relationships, for both machines and humans.

To conclude and clarify the workflow:

- Semantic Filmmaking (My Method): This is my process of transforming a classic script scene into a semantic scene (structured data).

- Semantic Scene (The Data I Provided): This is my input – the rich, structured, and meaning-laden information.

- Semantic Processing (by AI): This is the AI’s internal process of understanding and interpreting this semantic scene (my input) to generate the desired visuals.

In essence, I’ve created the “semantic raw material,” and the AI performs the “semantic processing” to transform it into the finished product – my storyboard visuals and/or a moodboard.

Step 2: Sense Flow

At this point, both the AI and I (along with the crew and cast) have a clear understanding of the core elements and overall atmosphere of this scene. However, to achieve the visual coherence required for a robust moodboard or storyboard, we must dive much deeper into granularity. We need to be obsessively atomic in our details, precisely as we are when making the actual film. This level of meticulousness is crucial for both human collaborators and the AI. For example, how does Dr. Evelyn Reed truly look?

Description: Dr. Evelyn Reed is a middle-aged white woman with long, silver hair intricately styled in two neat braids. She wears a practical, layered outfit consisting of a dark, loose, long-sleeved top, a dark leather jacket, dark loose-fitting pants, and worn-out boots. Her accessories include a silver necklace with an elaborate pendant and simple silver rings.

Semantic Layering: Building a Character for AI

What you’ve just seen with Dr. Evelyn Reed’s description is more than just a character breakdown; it’s a practical demonstration of semantic layering. In traditional filmmaking, details like “long, silver hair intricately styled in two neat braids” are communicated verbally or through concept art. But for AI, these are specific data points that contribute to a holistic understanding.

I’ve essentially deconstructed Dr. Reed into her fundamental “filmic words” – elements that directly translate to visual and narrative components. Instead of a general notion of a “middle-aged woman,” I’ve provided:

- Precise Physical Attributes: Not just “silver hair,” but “long, silver hair intricately styled in two neat braids.” This guides the AI on both color and specific styling.

- Costume as Character: Her “practical, layered outfit” with specific items like a “dark leather jacket” and “worn-out boots” informs the AI about her profession, personality, and even her journey.

- Accessories with Intent: “A silver necklace with an elaborate pendant, and simple silver rings” add subtle details that hint at her character’s depth or potential narrative clues.

Each of these chosen “filmic words” acts as a direct instruction to the AI, moving beyond simple keywords to create a richly detailed, coherent character. This semantic layering ensures that the AI’s generated visuals align perfectly with the subtle nuances of the script, translating my vision into pixel-perfect execution.

Crucially, the AI now retains this comprehensive understanding of Dr. Evelyn Reed throughout the entire film. It remembers her appearance, costume, emotional themes, and even her narrative arc. You only need to provide updates for specific moments or scenes where her appearance, costume, or emotional state changes. For those instances, you would add new “facial expression,” “costume,” or other relevant details, building upon the existing semantic foundation.
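If you prefer not to rely on the model remembering everything (for example, when you start a new conversation or switch tools), one practical option, offered here as an assumption rather than as part of the framework described above, is to keep the character’s base profile in one place and merge only the per-scene changes into it before each prompt. The names `BASE_REED`, `character_for_scene`, and `describe` are hypothetical.

```python
# Base profile taken from Dr. Reed's description above (hypothetical key names).
BASE_REED = {
    "name": "Dr. Evelyn Reed",
    "details": "middle-aged white woman",
    "hair": "long, silver hair intricately styled in two neat braids",
    "costume": "dark, loose, long-sleeved top, dark leather jacket, "
               "dark loose-fitting pants, worn-out boots",
    "accessories": "silver necklace with an elaborate pendant, simple silver rings",
}


def character_for_scene(base: dict[str, str], overrides: dict[str, str]) -> dict[str, str]:
    """Return the base profile with scene-specific fields replaced or added.

    The base dictionary is not modified, so later scenes start from the same foundation.
    """
    return {**base, **overrides}


def describe(profile: dict[str, str]) -> str:
    # Render every field as "field: value" so nothing is left implicit.
    return "; ".join(f"{key.replace('_', ' ')}: {value}" for key, value in profile.items())


# Only what changes in this moment is written out; everything else is inherited.
bazar_closeup = character_for_scene(BASE_REED, {"facial_expression": "concentrated"})
print(describe(bazar_closeup))
```

Restating the full profile in each prompt costs a few extra words but guards against drift in her hair, costume, or accessories between panels.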

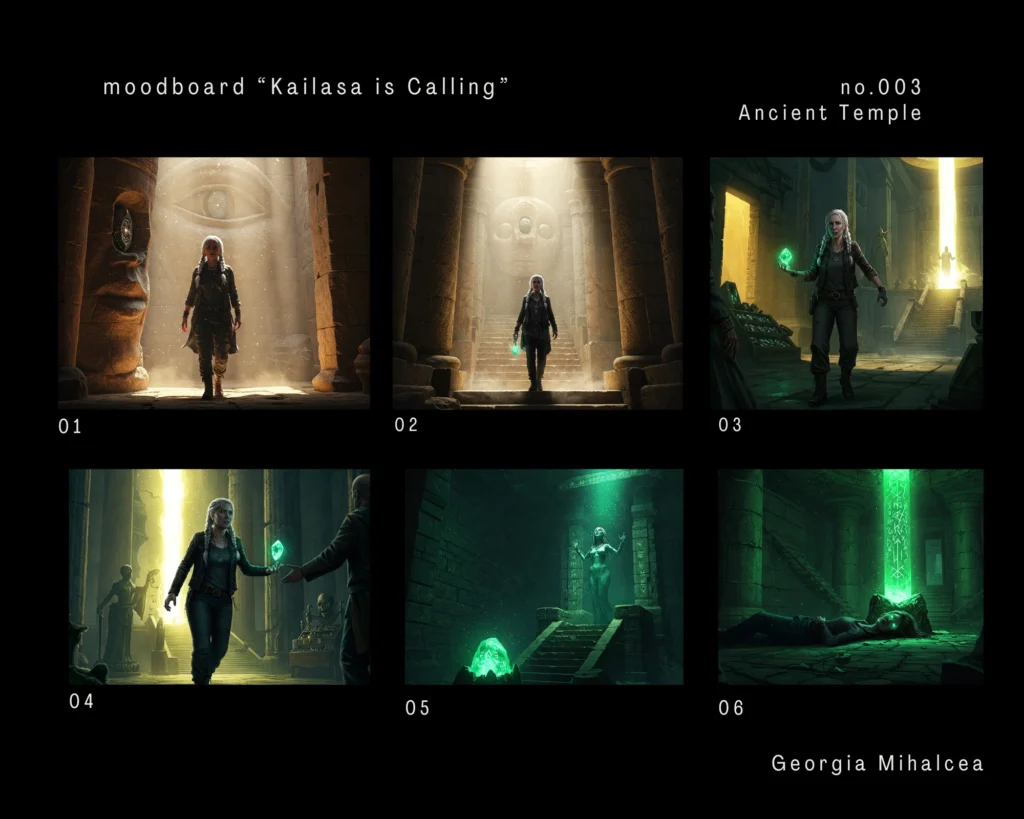

From Text to AI Storyboard: The ‘Kailasa is Calling’ Case Study

With the semantic foundation meticulously established, it’s time to see the framework in action. Below, you’ll find the storyboard panels generated for the ‘Kailasa is Calling’ scene, demonstrating how precisely structured language transforms into compelling visuals, ready for both human and algorithmic understanding.

Bringing the Vision to Life: The ‘Kailasa is Calling’ Video Storyboard

With the foundational visual language established through my semantic moodboards, the next step is to animate these stills into a dynamic narrative. By leveraging the precise semantic data that underpinned these conceptual images, we can seamlessly stitch them together into a cohesive video storyboard – a powerful visual pitch or teaser.

This final step truly brings the vision of ‘Kailasa is Calling’ to life, demonstrating not just individual shots, but the intended flow, pacing, and emotional arc of a scene before a single frame is actually shot. It’s the ultimate cost-free and time-saving proof of concept, building a comfortable bridge between human creativity and AI’s generative power.

From 14 Gemini-generated visuals – the output of my words-driven semantic filmmaking framework, which effectively serves as an “AI storyboard generator” – I rapidly created a compelling video pitch. Using just my smartphone and the free version of the CapCut video editor, I transformed this dark fantasy idea into a “TikTok-able” teaser in mere minutes. The ability to export it in both vertical (9:16) and square (1:1) formats ensured seamless publication across diverse short-form video platforms, including TikTok, Facebook Reels and Stories, Instagram, YouTube Shorts, X, and LinkedIn.
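If the generated panels are landscape while the export targets 9:16 and 1:1, each frame has to be cropped (or padded). The sketch below computes the largest centered crop for a target aspect ratio; it is plain arithmetic, not CapCut’s own algorithm, and the 1920 x 1080 input size is an assumed example.

```python
from fractions import Fraction


def center_crop(width: int, height: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Largest centered box with aspect ratio ratio_w:ratio_h inside a width x height frame.

    Returns (left, top, crop_width, crop_height) in pixels.
    """
    target = Fraction(ratio_w, ratio_h)
    if Fraction(width, height) > target:
        # Frame is wider than the target: keep the full height, trim the sides.
        crop_h = height
        crop_w = int(height * target)
    else:
        # Frame is taller than (or equal to) the target: keep the full width, trim top and bottom.
        crop_w = width
        crop_h = int(width / target)
    # Many video encoders need even dimensions, so round down to an even number.
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h


print(center_crop(1920, 1080, 9, 16))  # vertical 9:16 from a landscape panel
print(center_crop(1920, 1080, 1, 1))   # square 1:1 from a landscape panel
```

Using `Fraction` avoids floating-point rounding surprises when comparing aspect ratios. Note how much of a landscape frame a 9:16 crop discards; composing key action near the center of each panel keeps both formats usable.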

From AI Synergy to Lifecycle Mastery: The Semantic Advantage

Your End-to-End Film Blueprint

Before concluding, it’s crucial to acknowledge that advanced generative AI tools such as RunwayML, Midjourney, DALL-E 2, and Stable Diffusion each operate with their unique underlying architectures, prompt syntaxes, and specialized outputs – whether focusing on video generation, hyper-realistic imagery, or diverse artistic styles. However, regardless of their specific functionalities, my semantic filmmaking framework remains the indispensable first step in this creative process.

This is because the method provides the structured, simplified instructions that come directly from your conceptual narrative. Whether you are meticulously deconstructing an existing script into semantic layers or writing your narrative directly in a structured format, it lets you create precise, actionable directives that these advanced AI instruments can effectively interpret.

The visually rich outputs generated through this foundational step – from moodboards to video storyboards – can then serve a dual purpose: not only do they clarify and refine your creative vision, but these high-quality, concept-aligned visuals can also be used, where a tool supports it, to further train and fine-tune these specialized AI models for even more nuanced and accurate results tailored to your specific cinematic needs.

Beyond its immediate utility in pre-visualization, the semantic version of your script evolves into a powerful, living blueprint that continues to serve every department and phase throughout the film’s entire lifecycle. From the initial stages of production and post-production, where it ensures consistency in visual style, sound design, and editing, to the critical realms of marketing and distribution, this structured data proves invaluable.

Programmatically, it enables unprecedented precision: facilitating automated metadata generation for streaming platforms, optimizing content for targeted advertising campaigns, enhancing discoverability through sophisticated algorithmic understanding, and even enabling audience segmentation for highly personalized outreach. This holistic approach ensures that the original creative vision remains intact and efficiently communicated, from concept inception right through to audience reception and beyond.
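As one hedged example of automated metadata generation, fields from the semantic scene can be mapped onto schema.org’s `Movie` type and serialized as JSON-LD, a structured-data format that search engines read. Which fields a given streaming platform actually ingests is not covered here; the mapping, the `to_jsonld` helper, and the keyword choices below are assumptions for illustration.

```python
import json


def to_jsonld(title: str, genre: str, scene: dict) -> str:
    """Build a schema.org Movie description from semantic-scene fields.

    Which scene fields become keywords is a hypothetical mapping.
    """
    keywords = []
    for field in ("atmosphere", "emotions"):
        # Split comma-separated values into individual keywords.
        keywords.extend(part.strip() for part in scene[field].split(","))
    keywords.append(scene["place"])
    data = {
        "@context": "https://schema.org",
        "@type": "Movie",
        "name": title,
        "genre": genre,
        "description": scene["description"],
        # A JSON array avoids ambiguity with commas inside values like the place name.
        "keywords": list(dict.fromkeys(keywords)),  # de-duplicate, keep order
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


scene = {
    "description": "Dr. Reed discovers an artifact in the ancient bazar.",
    "place": "Kailasa Temple, India",
    "atmosphere": "mysterious, eerie",
    "emotions": "Curiosity, suspense, mystery",
}
print(to_jsonld("Kailasa is Calling", "dark fantasy", scene))
```

Because the keywords come straight from the semantic scene, the creative vocabulary written for the AI storyboard is reused, rather than rewritten, when the film needs discovery metadata.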

Unlock Your Vision: Start Making Semantic Films

The ‘Kailasa is Calling’ case study offers just a glimpse into the transformative potential of the semantic filmmaking framework. If this exploration of turning words into compelling visuals has resonated with your creative aspirations, I invite you to continue this journey with me.

My comprehensive PhD thesis, which delves much deeper into the theoretical underpinnings and practical applications of this framework, will be available in a couple of months. Should you be interested in receiving a free copy, please subscribe to my updates or reach out directly to express your interest.

For creators eager to gain hands-on experience and apply these methodologies to their own projects, I am developing specialized workshops designed to provide practical guidance and in-depth understanding.

Beyond workshops, I am also keen to explore collaborations and knowledge exchange with fellow specialists – from AI researchers and software developers to filmmakers, screenwriters, producers, and marketing strategists – to push semantic content creation further, in ways that creatives and filmmakers can easily adopt, and so democratize film visibility and discovery.

I am currently turning my own films into case studies, continuing to learn and to explore semantic content creation across diverse genres and across the development, production, and distribution stages. Stay tuned for more insights and visual explorations.

May your cinematic endeavors be filled with clarity and groundbreaking discovery. Should you choose to apply this semantic filmmaking framework, I genuinely invite you to share your process, results, and insights. Your experiences are invaluable as we continue to explore new frontiers in visual storytelling.
